I am an Engineer. I serve mankind by making dreams come true.

—Anonymous

Enterprise Solution Delivery

The Enterprise Solution Delivery competency describes how to apply Lean-Agile principles and practices to the specification, development, deployment, operation, and evolution of the world’s largest and most sophisticated software applications, networks, and cyber-physical systems. It is one of the seven core competencies of the Lean Enterprise, each of which is essential to achieving Business Agility. Each core competency is supported by a specific assessment, which enables the enterprise to assess its proficiency. These core competency assessments, along with recommended improvement opportunities, are available from the Measure and Grow article.

Building and evolving large, enterprise solutions is a monumental effort. Many such systems require hundreds or thousands of engineers to build them. Components and systems provided by different organizations must be integrated, and most are subject to significant regulatory and compliance constraints. Cyber-physical systems require a broad range of engineering disciplines and utilize hardware and other long lead-time items. They demand sophisticated, rigorous practices for engineering, operations, and evolution.

Why Enterprise Solution Delivery?

Humanity has always dreamed big, and scientists, engineers, and software developers turn those dreams into reality. That requires innovation, experimentation, and knowledge from diverse disciplines. Engineers and developers bring these innovations to life by defining and coordinating all the activities needed to successfully specify, design, test, deploy, operate, evolve, and decommission large, complex solutions. These activities include:

- Requirements analysis

- Business capability definition

- Functional analysis and allocation

- System design and design synthesis

- Design alternatives and trade studies

- Modeling and simulation

- Building and testing components, systems, and systems of systems

- Compliance and verification and validation

- Deployment, monitoring, support, and system updates

Over the decades that these systems remain operational, their purpose and mission evolve. That calls for new capabilities, technology upgrades, security patches, and other enhancements. These are true ‘living systems’: the activities above are never really ‘done’ because the system itself is never ‘complete.’

The DevOps movement has advanced best practices for large software systems, enabling frequent system upgrades through a Continuous Delivery (CD) Pipeline. Today, a range of innovations allows large cyber-physical systems to leverage these practices to provide faster, more continuous delivery of value, including:

- Programmable hardware

- 5G

- Over-the-air updates

- IoT

- Additive manufacturing

These practices have also changed the definitions, and even the goal, of ‘becoming operational.’ Systems are not simply deployed once, then merely ‘supported.’ Instead, they are released early and developed over time. Engineers build and validate the CD Pipeline as they build and validate the system since both are critical to the system’s success.

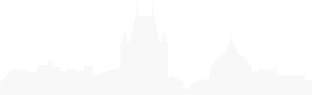

This competency describes nine best practices for applying Lean-Agile development to build and advance some of the world’s most vital and significant living systems. The nine practices are grouped into the three essential dimensions of enterprise solution delivery (Figure 1).

Lean Systems and Solution Engineering applies Lean-Agile practices to align and coordinate all the activities necessary to specify, architect, design, implement, test, deploy, evolve, and ultimately decommission these systems. Aspects of this dimension include:

- Continually refine the fixed/variable Solution Intent

- Apply multiple planning horizons

- Architect for scale, modularity, releasability, and serviceability

- Continually address compliance concerns

Coordinating Trains and Suppliers coordinates and aligns the extended, and often complex, set of value streams to a shared business and technology mission. It uses the coordinated Vision, Backlogs, and Roadmaps with common Program Increments (PI) and synchronization points. Aspects of this dimension include:

- Build and integrate solution components and capabilities with Agile Release Trains (ARTs) and Solution Trains

- Apply ‘continuish’ integration

- Manage the supply chain with systems of systems thinking

Continually Evolve Live Systems ensures large systems and their development pipeline support continuous delivery. Aspects of this dimension include:

- Build a Continuous Delivery Pipeline

- Evolve deployed systems

The remainder of this article describes these nine practices.

Continually Refine the Fixed/Variable Solution Intent

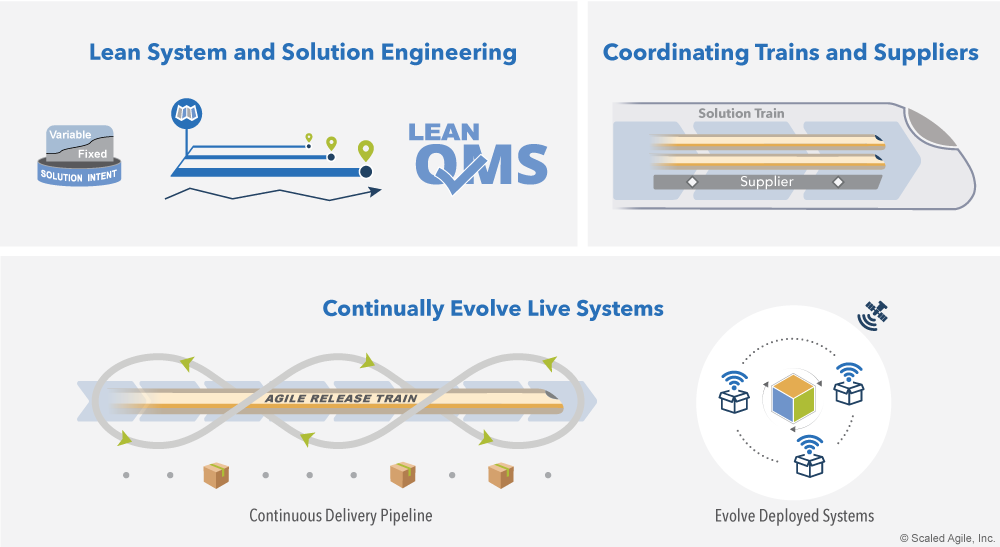

Systems engineering is a well-established discipline that has traditionally applied the familiar ‘V’ lifecycle model [1]. The ‘V’ model describes the critical activities for building large systems from inception through retirement. Flow-based systems like SAFe perform the same activities, but in smaller batches, continuously throughout the lifecycle (Figure 2).

This replaces the ‘V’ model with continuous and concurrent engineering. In addition, the technology advances discussed earlier (over-the-air updates, programmable hardware) reduce the cost of changing these systems, tilting the economics toward preserving design options (SAFe Principle #3) and exploring alternatives via set-based design. They make flow-based systems like SAFe possible, allowing engineers to perform these activities continuously as part of the CD Pipeline. In every increment, engineers explore innovative ideas, refine future features, integrate and deploy previously refined features, and release value on demand as users or markets dictate.

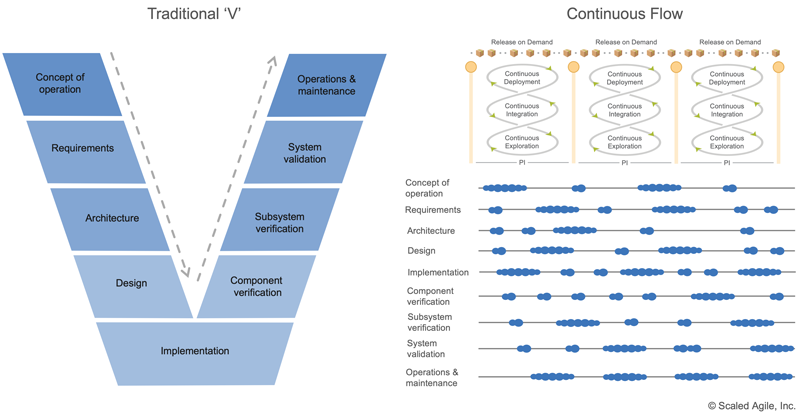

The fixed and variable Solution Intent captures and communicates the system’s current and intended structure and behavior in a flexible form, aligning all system builders to a shared direction. Its companion, Solution Context, defines the system’s deployment, environmental, and operational constraints. Both should give teams the flexibility to make localized requirements and design decisions during implementation. For solutions of scale, solution intent and solution context flow down the value stream (Figure 3). As downstream subsystem and component teams implement decisions, the knowledge gained from their continuous exploration (CE), integration (CI), and deployment (CD) efforts flows back upstream as feedback and moves decisions from variable (undecided) to fixed (decided).
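To make the fixed/variable idea concrete, here is a minimal Python sketch, assuming solution intent is tracked as a set of decisions, each holding the options still being explored. `Decision`, `SolutionIntent`, `record_feedback`, and `fix` are hypothetical names, not part of any SAFe tooling, and the rule that a decision can only be fixed after feedback has been recorded is an illustrative assumption that mirrors the upstream feedback described above.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    VARIABLE = "variable"  # undecided: options are still being explored
    FIXED = "fixed"        # decided, based on feedback from downstream work


@dataclass
class Decision:
    """One element of a hypothetical solution-intent record."""
    name: str
    options: list[str]
    status: Status = Status.VARIABLE
    chosen: str | None = None
    evidence: list[str] = field(default_factory=list)

    def record_feedback(self, note: str) -> None:
        # Knowledge from continuous exploration, integration, and deployment
        self.evidence.append(note)

    def fix(self, option: str) -> None:
        # Move the decision from variable to fixed (assumed rule: needs evidence)
        if option not in self.options:
            raise ValueError(f"{option!r} was not among the explored options")
        if not self.evidence:
            raise ValueError(f"no feedback recorded for {self.name!r} yet")
        self.chosen = option
        self.status = Status.FIXED


@dataclass
class SolutionIntent:
    decisions: dict[str, Decision] = field(default_factory=dict)

    def add(self, decision: Decision) -> None:
        self.decisions[decision.name] = decision

    def variable(self) -> list[str]:
        return [n for n, d in self.decisions.items() if d.status is Status.VARIABLE]

    def fixed(self) -> dict[str, str | None]:
        return {n: d.chosen for n, d in self.decisions.items() if d.status is Status.FIXED}
```

A decision such as choosing between a CPU and an FPGA for navigation stays variable while teams explore both options, then becomes fixed once prototype results come back; the decisions that remain open are visible at any time through `variable()`.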

Apply Multiple Planning Horizons

Building massive and technologically innovative systems involves significant uncertainty and risk. The traditional approach to managing this risk is to develop detailed, long-range plans. In practice, however, technical discoveries, gaps in specifications, and new understanding from customers can quickly unravel these comprehensive schedules. Instead, Agile teams and trains use backlogs and roadmaps to manage and forecast work. To ensure teams deliver the most value each increment, backlog items can be added, changed, removed, and reprioritized as new knowledge becomes available.

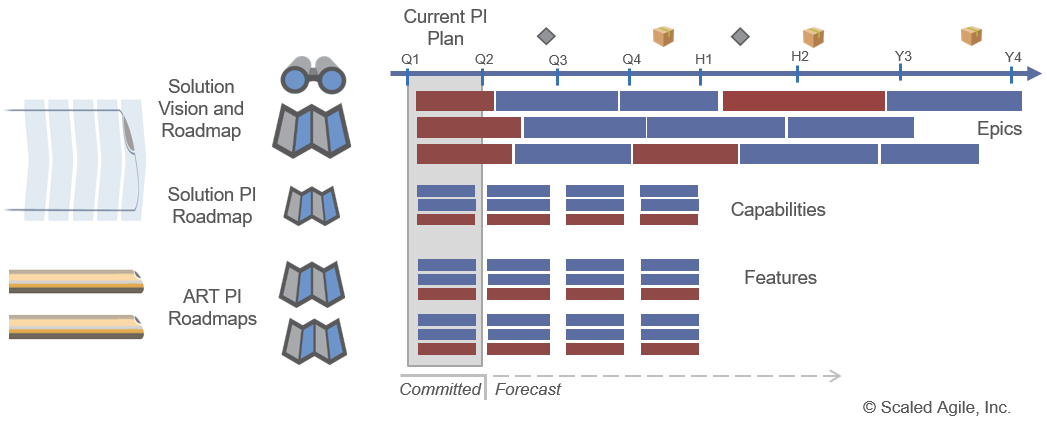

Roadmaps provide multiple planning horizons, allowing engineers to forecast, but not commit to, long-term work not yet well defined or understood, while committing to near-term work that is better defined. Figure 4 shows a multi-year solution roadmap of epics. PI Roadmaps decompose nearer-term epics into features and capabilities and plan them in increments. There is an always-current ‘PI Plan’ that represents the delivery commitments for the current PI.
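The horizons can be sketched as a simple classification, assuming each roadmap item carries the number of the PI in which it is planned. The names `RoadmapItem` and `split_horizons` and the three-PI span of the PI Roadmap are illustrative assumptions; the sketch only encodes the rule that current-PI items are commitments while everything later remains a forecast.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoadmapItem:
    name: str
    kind: str       # "epic", "capability", or "feature"
    target_pi: int  # Program Increment in which the item is planned


def split_horizons(items, current_pi, pi_roadmap_span=3):
    """Group items into planning horizons (the span of 3 PIs is an assumption).

    commitment:       planned for the current PI (the PI Plan)
    pi_roadmap:       forecast for the next few PIs, not committed
    solution_roadmap: longer-range forecast, typically epics
    """
    horizons = {"commitment": [], "pi_roadmap": [], "solution_roadmap": []}
    for item in items:
        if item.target_pi < current_pi:
            continue  # past PIs are no longer part of forward planning
        if item.target_pi == current_pi:
            horizons["commitment"].append(item.name)
        elif item.target_pi <= current_pi + pi_roadmap_span:
            horizons["pi_roadmap"].append(item.name)
        else:
            horizons["solution_roadmap"].append(item.name)
    return horizons
```

Replanning then amounts to changing an item’s `target_pi`; only items that land in the current PI become commitments.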

Architect for Scale, Modularity, Releasability, and Serviceability

Architectural choices are critical economic decisions because they determine the effort and cost required for future changes. Software development can leverage frameworks and infrastructure that provide proven architectural components out of the box. Builders of large cyber-physical systems must develop their own, applying intentional architecture and emergent design, which encourages collaboration between architects and teams. The goal is to create a resilient system that enables teams and trains to independently build, test, deploy, and even release their parts of large solutions.

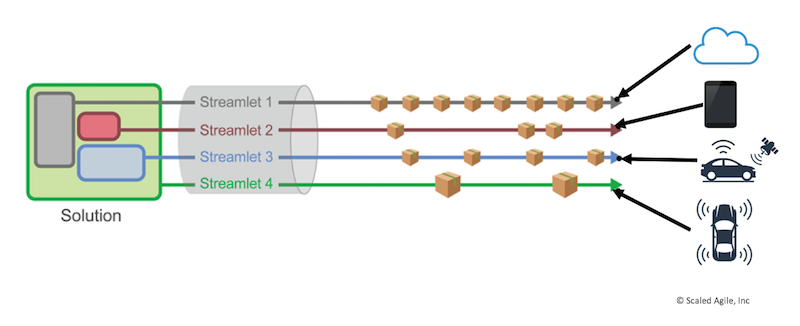

With the right architecture, elements of the system can be released independently, as the autonomous delivery system example in Figure 5 illustrates. Routing and delivery information are allocated to cloud services and support continuous updates. Vehicle control and navigation, running on onboard CPUs and FPGAs, can be updated over the air. Hardware sensors mounted on the chassis are updated rarely because changing them requires taking the vehicle out of service and possibly recertifying it. Although these choices may raise unit costs through more expensive hardware and vehicle communications, they allow continuous value delivery and can lower the total cost of ownership.
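The allocation in Figure 5 can be sketched as a mapping from components to release channels, assuming the three channels described above. The component keys, `Channel`, `release_plan`, and `needs_vehicle_downtime` are hypothetical names chosen for illustration; the sketch shows how an allocation decision determines which changes can ship without taking a vehicle out of service.

```python
from enum import Enum


class Channel(Enum):
    CLOUD = "cloud service"          # continuous updates
    OVER_THE_AIR = "over-the-air"    # onboard CPUs and FPGAs
    SERVICE_VISIT = "service visit"  # physical hardware; vehicle out of service


# Allocation following the Figure 5 description; component names are illustrative
ALLOCATION = {
    "routing": Channel.CLOUD,
    "delivery_info": Channel.CLOUD,
    "vehicle_control": Channel.OVER_THE_AIR,
    "navigation": Channel.OVER_THE_AIR,
    "chassis_sensors": Channel.SERVICE_VISIT,
}


def release_plan(changed_components):
    """Partition a set of changed components by how they can be released."""
    plan = {channel: [] for channel in Channel}
    for component in sorted(changed_components):
        plan[ALLOCATION[component]].append(component)
    return plan


def needs_vehicle_downtime(changed_components):
    # In this model, only hardware changes force the vehicle out of service
    return any(ALLOCATION[c] is Channel.SERVICE_VISIT for c in changed_components)
```

Moving a responsibility from a chassis-mounted part to onboard programmable logic would, in this model, move it from the rare service-visit channel to the over-the-air channel.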

Continually Address Compliance Concerns

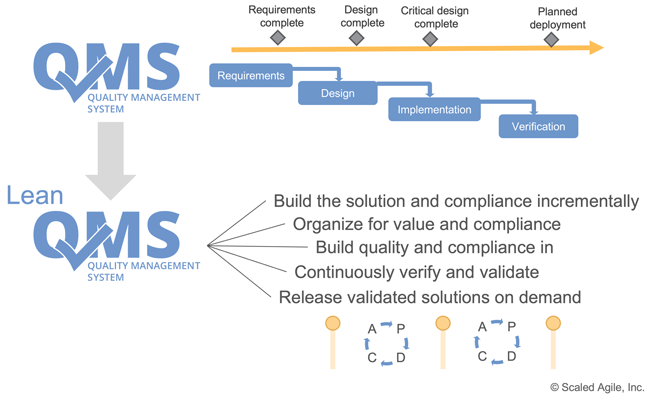

Simply stated, the failure of a large solution typically carries unacceptable social or economic costs. To protect public safety, these systems undergo routine regulatory oversight and must satisfy various compliance requirements. To ensure quality and reduce risk, organizations rely on quality management systems (QMS) that dictate practices and procedures and confirm safety and efficacy. However, most QMSs were created before Lean-Agile development. They were based on conventional approaches that often assumed (or even mandated) early commitment to incomplete specifications and designs, detailed work breakdown structures, and document-centric, phase-gate milestones. In contrast, a Lean QMS makes compliance activities part of the regular flow (Figure 6).

For more information on this topic, see the SAFe Advanced Topics article Achieving Regulatory and Industry Standard Compliance with SAFe.

Use Trains to Build and Integrate Solution Components and Capabilities

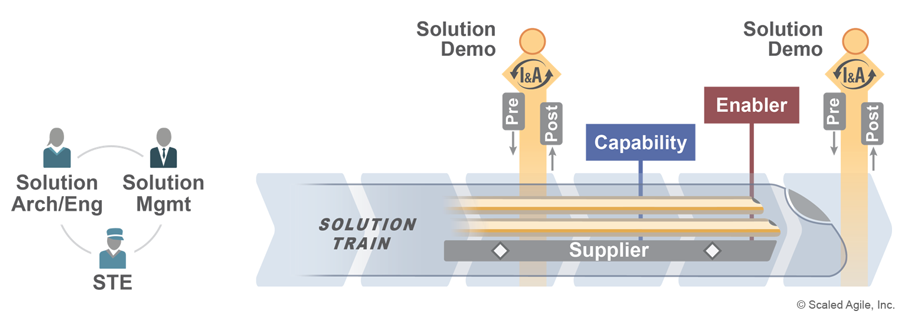

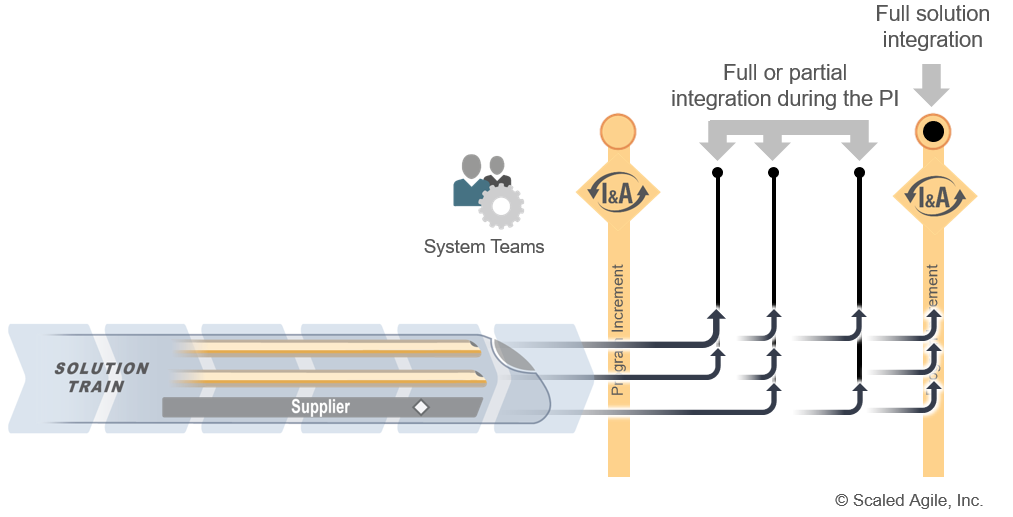

Systems are built by people. SAFe ARTs and Solution Trains define proven structures, patterns, and practices that coordinate and align developers and engineers to build, integrate, deploy, and release large systems. ARTs are optimized to align and coordinate large groups of individuals (50–125 people) as a team of Agile teams. Solution Trains scale ARTs to very large solutions with hundreds of developers and suppliers (Figure 7).

As systems scale, alignment becomes critical. Systems miss deadlines a day at a time, and every delay on every team accumulates. To plan, present, learn, and improve together, trains align everyone on a regular cadence. They integrate their solutions at least every PI to validate that they are building the right thing and verify technical assumptions. Trains with components that have longer lead times (e.g., integrating packaged applications or developing hardware) still deliver incrementally through proxies (e.g., mockup, stub, prototype) that can integrate with the overall solution and support early validation and learning.

Apply ‘Continuish Integration’

In the software domain, continuous integration is the heartbeat of continuous delivery: It’s the forcing function that verifies changes and validates assumptions across the entire system. Agile teams invest in automation and infrastructure that builds, integrates, and tests every developer change and provides nearly immediate feedback on errors.

Large, cyber-physical systems are far more difficult to continuously integrate because:

- Long lead-time items may not be available

- Integration spans organizational boundaries

- Automation is rarely end-to-end

- The laws of physics dictate certain limits

Instead, ‘continuish integration’ balances the transaction cost of integrating against the cost of delayed knowledge and feedback. The goal is frequent partial integration, with at least one full solution integration each PI (Figure 8).
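One way to reason about this tradeoff is a simplified U-curve model in the spirit of Reinertsen’s batch-size economics [4]. The sketch assumes each integration event has a fixed transaction cost and that the cost of delayed feedback falls roughly as 1/n when a PI contains n evenly spaced integrations. Both cost inputs are hypothetical figures in arbitrary units, not measured values, and `total_cost` and `best_frequency` are illustrative names.

```python
def total_cost(n, transaction_cost, delay_cost_once):
    """Cost per PI of integrating n times (simplified model, an assumption).

    transaction_cost: cost of one integration event (environments, setup, labor)
    delay_cost_once:  cost of delayed feedback if integrating only once per PI;
                      with n evenly spaced integrations, the average wait for
                      feedback shrinks roughly as 1/n, so it is modeled as C / n
    """
    return n * transaction_cost + delay_cost_once / n


def best_frequency(transaction_cost, delay_cost_once, max_per_pi=50):
    # Search starts at n = 1: at least one full integration each PI
    return min(
        range(1, max_per_pi + 1),
        key=lambda n: total_cost(n, transaction_cost, delay_cost_once),
    )


# Hypothetical figures, in arbitrary cost units per PI
print(best_frequency(transaction_cost=500, delay_cost_once=500))  # 1
print(best_frequency(transaction_cost=20, delay_cost_once=500))   # 5
print(best_frequency(transaction_cost=2, delay_cost_once=500))    # 16
```

In this model the continuous optimum is n* = sqrt(C / T), clamped to at least one integration per PI. Lowering the transaction cost through automation, for example from 20 to 2 units with the same delay cost, moves the optimum from 5 to 16 integrations per PI, which is why investment in integration infrastructure tends to pay off.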

When full integration is impractical, partial integration lessens risks. With partial integration, Agile teams and trains integrate and test in a smaller context and rely on the System Team for larger end-to-end tests with true production environments. A smaller context can mean testing a partial scenario or Use Case or testing with virtual/emulated environments, test doubles, mocks, simulations, and other models. This decreases the testing time and costs for teams and trains.
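A test double of this kind can be sketched in Python, assuming the navigation code depends only on a small sensor interface. `RangeSensor`, `SimulatedRangeSensor`, `Navigator`, and the 2-meter stop distance are hypothetical; the point is that a scripted double lets a team integrate and test its logic before the physical sensor, a long lead-time item, is available.

```python
from typing import Protocol


class RangeSensor(Protocol):
    """Interface the navigation code depends on (hypothetical)."""

    def distance_m(self) -> float: ...


class SimulatedRangeSensor:
    """Test double standing in for a long lead-time hardware sensor.

    Replays a scripted sequence of readings so navigation logic can be
    integrated and tested before the physical sensor exists.
    """

    def __init__(self, readings):
        self._readings = iter(readings)

    def distance_m(self) -> float:
        return next(self._readings)


class Navigator:
    def __init__(self, sensor: RangeSensor, stop_distance_m: float = 2.0):
        self.sensor = sensor
        self.stop_distance_m = stop_distance_m

    def step(self) -> str:
        # Decide the next action from one sensor reading
        if self.sensor.distance_m() <= self.stop_distance_m:
            return "brake"
        return "cruise"


def test_navigator_brakes_near_obstacle():
    nav = Navigator(SimulatedRangeSensor([10.0, 5.0, 1.5]))
    assert [nav.step() for _ in range(3)] == ["cruise", "cruise", "brake"]
```

When the real sensor arrives, any class that provides `distance_m()` can replace the double without changing `Navigator`, so the same tests can later run in the System Team’s end-to-end environment.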

At scale, integration often leverages proxies. For example, integrating models allows earlier end-to-end systems integration, development boards can be wired together with breadboards, and significant IT components can be proxied with test doubles. Economics will determine how frequently solution builders need to update these proxies to evolve and test the system fully end-to-end.

Manage the Supply Chain with Systems of Systems Thinking

Large system builders must integrate solutions across a substantial supply chain. Clearly, supplier alignment and coordination are critical to solution delivery. As a result, strategic suppliers should behave like trains: participate in SAFe events (planning, demos, Inspect and Adapt), use backlogs and roadmaps, adapt to changes, and so on. Agile contracts should encourage this behavior.

To collaborate like a train, suppliers must support train-like roles. The supplier’s Product Manager and Architect continuously align the backlog, roadmap, and Architectural Runway with those of the overall solution. Similarly, customer and supplier system teams share scripts and context to ensure that integration handoffs are smooth and free of delays.

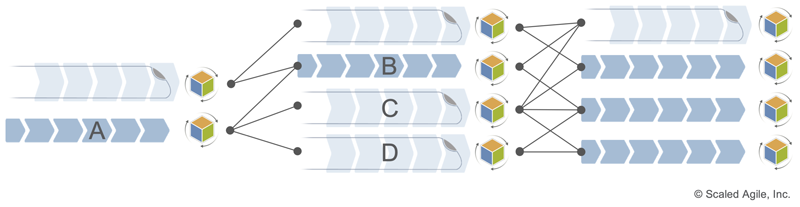

Supply chains can become complicated. Figure 9 shows a complex integration hierarchy where solutions in one context are part of a large solution in another. To balance the needs for trains B, C, and D, the product manager of train A must continually align backlogs and roadmaps. Adjustments made by one train can ripple across the extended supply chain.
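The ripple effect can be sketched as a breadth-first traversal of a supply graph. The graph below is a hypothetical reading of Figure 9: A supplies B, C, and D as the text describes, while the downstream solutions E and F are invented for illustration. `SUPPLIES` and `affected_by` are illustrative names.

```python
from collections import deque

# Hypothetical supply graph: supplier -> solutions that consume its output.
# A supplies B, C, and D (as in Figure 9); E and F are illustrative only.
SUPPLIES = {
    "A": ["B", "C", "D"],
    "B": ["E"],
    "C": ["E"],
    "D": ["F"],
    "E": [],
    "F": [],
}


def affected_by(change_at, supplies=SUPPLIES):
    """Return every downstream solution a change can ripple into (BFS)."""
    seen = set()
    queue = deque([change_at])
    while queue:
        node = queue.popleft()
        for consumer in supplies.get(node, []):
            if consumer not in seen:
                seen.add(consumer)
                queue.append(consumer)
    return seen
```

Even in this small graph, an adjustment at A reaches every other solution, which is why train A’s product manager has to align backlogs and roadmaps continually rather than once.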

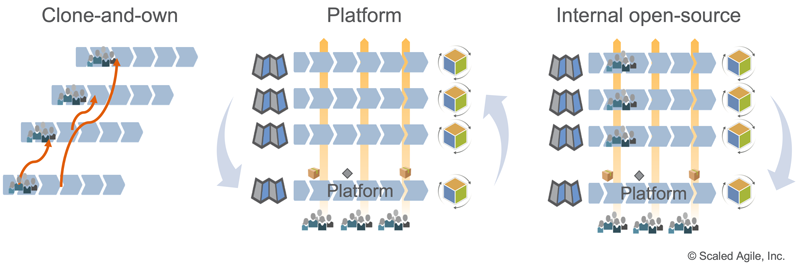

There are known patterns for coordinating supply chains (Figure 10). ‘Clone-and-own,’ a common technique, creates a new version for each customer. For example, in Figure 9, three versions of Solution A would supply B, C, and D with the work product they need to continue their development. Although this can speed up execution, it prevents economies of scale, often raising costs and lowering quality.

Instead, a ‘platform’ approach places all builders of Solution A in one value stream with aligned roadmaps. An ‘internal open-source model’ blends the two: by interacting directly with the platform team, or by making changes themselves, dependent value streams can accelerate delivery of what they need.

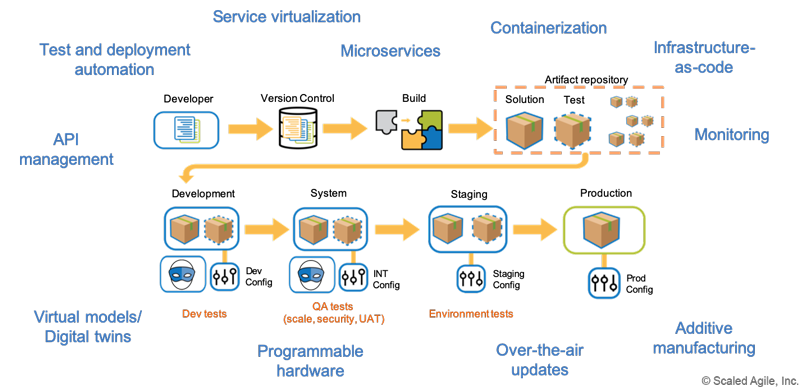

Build a Continuous Delivery Pipeline

Traditional large-system development focuses on building the system right the first time and minimizing changes once it is operational, so each innovation requires a significant upgrade effort. However, systems always evolve. Figure 11 shows a typical CD Pipeline (see Built-in Quality for more details) that automatically takes small developer changes, builds them, and then tests them in successively richer environments. Many technologies enable the pipeline. While the software technologies are well known and becoming standard, the cyber-physical community is just beginning to leverage emerging hardware technologies that enable the CD Pipeline. Virtual environments support simulation for early and fast feedback.
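The staged flow can be sketched as follows, assuming each environment is represented by a pass/fail check on a change record. The stage names (including ‘physical proxy’), the dictionary keys, and `run_pipeline` are illustrative assumptions, not any CI tool’s API; the sketch only shows the ordering principle of cheap, fast stages first and a stop at the first failure.

```python
from typing import Callable

# A stage is a name plus a check that returns True if the change passes there
Stage = tuple[str, Callable[[dict], bool]]

# Hypothetical stages, ordered from cheapest/fastest to richest/slowest
STAGES: list[Stage] = [
    ("build", lambda change: change.get("compiles", False)),
    ("unit tests", lambda change: change.get("unit_ok", False)),
    ("simulation", lambda change: change.get("sim_ok", False)),
    ("physical proxy", lambda change: change.get("proxy_ok", False)),
    ("staging", lambda change: change.get("staging_ok", False)),
]


def run_pipeline(change: dict, stages: list[Stage]) -> tuple[bool, list[str]]:
    """Promote one small change through successively richer environments.

    Stops at the first failing stage, so feedback arrives as early and as
    cheaply as possible.
    """
    log = []
    for name, check in stages:
        if not check(change):
            log.append(f"FAILED at {name}")
            return False, log
        log.append(name)
    return True, log
```

A change that breaks only in simulation never consumes time on scarce physical proxies or the staging environment, which is the main economic argument for ordering the stages this way.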

Development of the CD Pipeline begins at the same time as system development, and the two evolve together. All the other practices in this article support its creation and use. For example:

- Systems engineering activities for analysis and design (continuous exploration) are performed in small batches to flow through the pipeline quickly

- Planning includes building the pipeline as well as the system

- Continuish integration creates the automation and environments that can flow changes through the pipeline

- Architecture leverages over-the-air updates and programmable hardware to enable deployment and release in the operational environment

Continuous delivery pipelines are well established in software development. Large cyber-physical systems must also use them to support frequent releases of new functionality. This requires investing in automation and infrastructure that can build, test, integrate, and validate small developer changes in an end-to-end staging environment or a close proxy. Most hardware can be represented in models (Computer-Aided Design) and code (Verilog, VHDL) that already support continuous integration. For these systems, ‘end-to-end’ integration will likely begin with connected models that later evolve to physical proxies and, ultimately, production hardware.

Evolve Deployed Systems

Investment in the CD Pipeline changes the economics of going live. A fast, economical CD Pipeline means a minimum viable system can be released early and then evolve. This allows enterprises to learn much earlier with less investment, and possibly even to begin generating revenue sooner. Updating live systems is not new; for example, satellites have been launched before their software was fully ready. The goal is to get into the operational environment quickly to learn, gain feedback, deliver value, and get to market before the competition.

Systems must be architected to support continuous deployment and release on demand. For faster and more economical part creation, hardware modeling languages, additive manufacturing, and robotic assembly can start enabling ‘hardware as code.’ Certain hardware design decisions (programmable vs. ASIC, connectors vs. solder) and the allocation of system responsibilities to upgradeable components enhance system evolution.

Engineers are also exploring ways to adopt well-known software DevOps practices. For example, blue-green deployment supports continuous deployment with two environments: one for staging and one for live operations. This approach is being used on large systems like ships, where the cost of redundant hardware is offset by releasing new capability into the operational system years earlier and delaying the need to take the system out of service for upgrades.
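A minimal sketch of the blue-green switch, assuming a `validate` callback stands in for whatever acceptance checks a real system would run before cutover. `BlueGreenDeployment` and its methods are hypothetical names; on a ship, ‘blue’ and ‘green’ would correspond to redundant hardware sets rather than server pools.

```python
from typing import Callable


class BlueGreenDeployment:
    """Switch between two environments: one live, one idle (a sketch)."""

    def __init__(self, initial_version: str):
        self.versions = {"blue": initial_version, "green": initial_version}
        self.live = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def deploy(self, version: str, validate: Callable[[str, str], bool]) -> bool:
        # Install on the idle environment only; live operations are untouched
        target = self.idle
        previous = self.versions[target]
        self.versions[target] = version
        if not validate(target, version):
            self.versions[target] = previous  # discard the failed release
            return False
        self.live = target  # cutover: the idle environment becomes live
        return True

    def rollback(self) -> None:
        # The previous release still sits on the now-idle environment
        self.live = self.idle

    def live_version(self) -> str:
        return self.versions[self.live]
```

Because a failed validation never touches the live side, and a successful cutover leaves the previous release intact on the idle side, rollback is a switch rather than a redeployment.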

Summary

For a long time now, Agile has shown the benefits of delivering early and updating often to generate frequent feedback and develop solutions that delight customers. To stay competitive, organizations need to apply the same approach to larger and more complex systems, which often include both cyber-physical and software components. Enterprise solution delivery builds on the advances that have been made in Lean systems engineering and the technologies that have emerged to provide a more flexible approach to the development, deployment, and operation of such systems.

Alignment and coordination of ARTs and suppliers is maintained by continually refining solution intent, aligning everyone to a shared direction, alongside roadmaps that cover multiple planning horizons. ‘Continuish integration’ balances the economic tradeoffs between frequent systems integration and delaying feedback.

Enterprise solution delivery also describes the necessary adaptations to create a CD Pipeline in a cyber-physical environment by leveraging simulation and virtualization. This competency also provides strategies for maintaining and updating these true ‘living systems’ to continually extend their life and thereby deliver higher value to end users.

Learn More

[1] https://www.sebokwiki.org/wiki/System_Life_Cycle_Process_Models:_Vee

[2] Oosterwal, Dantar P. The Lean Machine: How Harley-Davidson Drove Top-Line Growth and Profitability with Revolutionary Lean Product Development. Amacom, 2010.

[3] Ward, Allen, and Durward Sobek. Lean Product and Process Development. Lean Enterprise Institute, 2014.

[4] Reinertsen, Don. Principles of Product Development Flow: Second Generation Lean Product Development. Celeritas Publishing, 2009.

[5] Forsgren, Nicole, Jez Humble, and Gene Kim. Accelerate: The Science of Lean Software and DevOps: Building and Scaling High Performing Technology Organizations. IT Revolution Press, 2018.

Last update: 10 February 2021